If linear regression was covered in my college linear algebra class, it was covered only very briefly. Much can be said on the subject. This is post #5 in a series discussing the expression (X'X)^-1 X'Y used in linear regression. Previous posts are here: #1, #2, #3, #4.

I'm grateful for the availability of Gilbert Strang's M.I.T. 18.06 lectures on video. Many thanks to both M.I.T. and Professor Strang. One of the most illuminating aspects of Strang's lectures is the range of perspectives offered. He gives a masterful introduction to the properties of matrices and the associated operations from multiple perspectives: geometry, row space, column space, etc.

Seeing matrices from the various perspectives is really important, I believe. One of the things that tripped me up in my understanding of the use of matrices in linear regression was a natural inclination towards focusing strictly on row space.

Let me elaborate.

Generally, the times you find yourself in need of regression analysis look like this. There's some response surface you'd like to model. The dimensionality of the space is defined by independent variables over which you have some control, and you can sample this space by measuring some dependent response at selected points.

Following standard conventions, each point in the sample space becomes a row in a matrix typically labeled 'X' (the independent variables), and each sampled response becomes the corresponding row of another matrix, typically a vector, labeled 'Y' (the dependent variable(s)).

An example should do wonders. Let's consider a case using pixels on a computer screen (for the sake of simplicity assume these are greyscale pixels with an intensity from 0 to 255). The two-dimensional space of the computer screen will be sampled at various points, with the top-left pixel located at (0,0) and coordinates increasing rightward and downward.

Let's say we sample the pixels at (1,0) and (0,2) and get intensity values of 10 and 40, respectively.

We'll fit these two points with a simple linear model:

intensity = x * B0 + y * B1

Pixel (1,0) has an intensity of 10 and pixel (0,2) has an intensity of 40, which gives us the following two equations:

1*B0 + 0*B1 = 10

0*B0 + 2*B1 = 40

The solution to the system is obvious. Both constraints are met when B0 is 10 and B1 is 20.

In matrix form:

X*B = Y

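Explicitly (writing matrix rows separated by semicolons, with ' denoting the transpose):

[1 0; 0 2] * [B0 B1]' = [10 40]'

The system is solved by multiplying both sides by the inverse of X:

[B0 B1]' = [1 0; 0 0.5] * [10 40]'

Giving the solution:

[B0 B1]' = [10 20]'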

When you multiply the first row of X (the first sample point) by the coefficient vector (B), you get the first row of vector Y; when you multiply the second row of X by the coefficient vector (B), you get the second row of Y.
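For readers who'd like to check the two-point case numerically, here is a minimal sketch in Python with NumPy (a tool choice made for this illustration; the variable names are assumptions, not part of the original post). It solves the square system with the inverse of X and then repeats the row-by-row check described above.

```python
import numpy as np

# Rows of X are the sampled pixel coordinates (x, y); Y holds the intensities.
X = np.array([[1.0, 0.0],
              [0.0, 2.0]])
Y = np.array([10.0, 40.0])

# Multiply both sides of X*B = Y by the inverse of X.
B = np.linalg.inv(X) @ Y

# Row check: each row of X times B should reproduce the matching entry of Y.
row_products = np.array([X[0] @ B, X[1] @ B])

print(B)             # coefficients B0, B1
print(row_products)  # should match Y
```

This only works because X is square and invertible here, which is exactly the property that breaks once a third sample is added.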

The relationship between our matrices and the real world is evident. We're sampling points in a 2D space, the screen. Each point sampled corresponds to a row of the X matrix, and each sampled intensity has a corresponding row in Y.

In retrospect, an obstacle to my own understanding was the clear correspondence between the sample space in reality and the rows of the matrices. The relationship is very conducive to thinking of points in space strictly in terms of matrix rows. Unfortunately, this isn't the space in which the least squares solution exists. The rows of X are the wrong space to focus on.

"Huh? Whaddya mean it's the wrong space. It's the only space there is!"

Nope, there's another space. The rows of X are indeed points selected in your sample space (computer screen coordinates), but if you want to find the least squares solution, you need to consider the case in which the columns of X are points in space; as opposed to the "row space" of X, this is another space called the "column space."

Unlike the rows, the columns of X don't correspond directly to our real world sample space (i.e., the computer screen). Even so, the column space is where the least squares solution lives. If that seems counterintuitive and it leaves you scratching your head, you're not alone.

I'll do my best to explain.

Let's add another pixel intensity sample to this example, except this time we'll rig the numbers so we maintain a perfect fit. We'll say the intensity at pixel (2,2) is 60, because that's what the equation we calculated above will predict: (2*B0 + 2*B1) = (2*10 + 2*20) = 60.

In terms of matrices, we now have three sample points in the rows of X and three intensities in the rows of Y.

Now that we have three sample points and two unknowns, we have a typical situation in which the system is overconstrained; that is, we have more constraints than unknowns, and usually there won't be a perfect solution to the system. (There's a solution for this case because we cheated in adding the fake sample with a value of 60.)

As far as matrix algebra goes, now that we've added another point, our X matrix is no longer square (it's 3 x 2), so we can no longer invert it to find a solution to the system.
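A small sketch of the three-sample situation, again in Python with NumPy (names are illustrative assumptions). It shows that X is now 3 x 2, that NumPy's `np.linalg.inv` refuses a non-square matrix by raising `LinAlgError`, and that the rigged third sample is still fit exactly by the coefficients found earlier.

```python
import numpy as np

# Three sampled pixels in the rows of X, three intensities in Y.
X = np.array([[1.0, 0.0],
              [0.0, 2.0],
              [2.0, 2.0]])
Y = np.array([10.0, 40.0, 60.0])

print(X.shape)  # three rows (samples), two columns (unknowns)

# A non-square matrix has no ordinary inverse; NumPy raises LinAlgError.
try:
    np.linalg.inv(X)
    inverted = True
except np.linalg.LinAlgError:
    inverted = False

# The coefficients from the two-point fit still satisfy all three equations,
# but only because the third sample was rigged.
B = np.array([10.0, 20.0])
fits_exactly = np.allclose(X @ B, Y)

print(inverted, fits_exactly)
```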

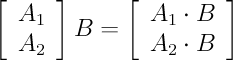

Multiplying X by B gives us the following matrix-vector product:

X*B = [(1*B0 + 0*B1) (0*B0 + 2*B1) (2*B0 + 2*B1)]'

The result is shown in what's referred to as the "row view" of a matrix-vector product. Thanks to the distributive property, we can factor B0 and B1 out of the result to get "the column view" of the matrix-vector product:

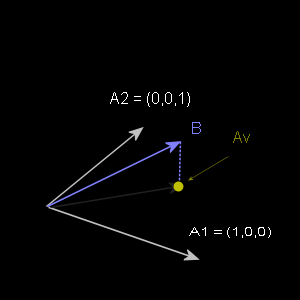

If you're unfamiliar with "the column view," I recommend researching it a bit, because it offers valuable mathematical insights. The short answer is that the product of a matrix and a vector is a linear combination of the matrix columns. It's the first column of the matrix multiplied by the first vector component plus the second column of the matrix multiplied by the second vector component and so on. (A matrix maps vectors into its own column space.)
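The equivalence of the two views is easy to confirm numerically. The sketch below (Python with NumPy; the names `row_view` and `column_view` are made up for this illustration) computes the same product both ways using the three-sample X and the known coefficients.

```python
import numpy as np

X = np.array([[1.0, 0.0],
              [0.0, 2.0],
              [2.0, 2.0]])
B = np.array([10.0, 20.0])

# Row view: one dot product per row of X (one per sampled pixel).
row_view = np.array([row @ B for row in X])

# Column view: B0 times the first column plus B1 times the second column.
column_view = B[0] * X[:, 0] + B[1] * X[:, 1]

print(row_view)
print(column_view)
```

Both arrays hold the same three numbers; the only difference is whether you read the computation across the rows or down the columns.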

We can reformulate our problem in terms of the column view:

B0 * [1 0 2]' + B1 * [0 2 2]' = [10 40 60]'

Previously we were looking at a problem involving three 2D points (pixel coordinates) and three 1D responses (pixel intensities). This is the space where our real world problem lives. Now, we've transformed this problem into one involving two 3D points and one 3D response. This is the space in which our solution lives.

Recently, I made a comment about using an Etch-A-Sketch to demonstrate linear algebra. Here's another way of asking the question: If we have an Etch-A-Sketch with two knobs, B0 and B1, and the first knob moves the pen in the direction (1, 0, 2) and the second knob moves it in the direction (0, 2, 2), is it possible to turn the knobs in such a way that you wind up at the point (10, 40, 60)? And, if so, how much do you have to turn the knobs? (We know the answer is B0=10 and B1=20.)

If the point (10, 40, 60) lies on the surface of our imaginary Etch-A-Sketch, we have a perfect fit (which we do with our current example because we cheated). If the point is floating above or below the surface of our imaginary Etch-A-Sketch, the best we can do is move the pen to the closest possible point on the Etch-A-Sketch surface (which is what least squares does). We know how to find the solution to this problem, because we figured it out in the last post where we used linear algebra to find the projection of a vector onto a plane.
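One way to test whether the pen can reach a given point is to compare ranks: if appending Y to X as an extra column doesn't raise the rank, Y is a combination of the columns of X. The sketch below uses Python with NumPy; the helper `reachable` and the off-surface point (10, 40, 70) are hypothetical, introduced only for illustration.

```python
import numpy as np

# The two knob directions are the columns of X.
X = np.array([[1.0, 0.0],
              [0.0, 2.0],
              [2.0, 2.0]])

def reachable(X, Y):
    """Return True if Y is a linear combination of the columns of X."""
    augmented = np.column_stack([X, Y])
    return np.linalg.matrix_rank(augmented) == np.linalg.matrix_rank(X)

Y_on = np.array([10.0, 40.0, 60.0])   # the rigged sample from the post
Y_off = np.array([10.0, 40.0, 70.0])  # hypothetical: third intensity nudged to 70

on_surface = reachable(X, Y_on)
off_surface = reachable(X, Y_off)

print(on_surface, off_surface)
```

With the nudged intensity the point floats off the surface, which is the ordinary situation with measured data and the reason a projection is needed.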

Let's spend a little time mapping what we did here to what we did there. In the preceding example, we wound up with a problem involving three points on a computer screen and three pixel intensity values. These pixel coordinates became rows in our X matrix, the unknowns became the B vector and the pixel intensity values formed the Y vector.

Next we went from the "row view" of the problem to the "column view" of the problem--a move which took us from our real world problem space to what I'm calling the "solution space." (I think it helps to think of the problem living in one world and the solution living in another.)

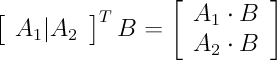

The column view formulation of the problem looks at the solution space and asks what combination of the two 3D vectors produces the vector Y, the one containing our three sampled intensities; and if no combination equals Y, what combination gets the closest to Y (i.e., least squares)? In other words, what is the projection of vector Y on the plane defined by all possible combinations of the two 3D vectors appearing in "the column view" of our problem?

In the context of this post, the vector B contains our unknowns. These are the coefficients we are looking to find. They will give us the best fit coefficients for predicting pixel intensities on the screen in our real world problem AND they will also give us the coefficients for the linear combination of column vectors that gets us closest to the Y vector in our "column view" solution space.

The 3D point closest to the Y vector (i.e., the projection of Y onto the column space of X) is the product X*B. In order to find the answer, we need to determine B. It may help to see the matrix product X*B, its row view and its column view again:

X*B = [(1*B0 + 0*B1) (0*B0 + 2*B1) (2*B0 + 2*B1)]' = B0 * [1 0 2]' + B1 * [0 2 2]'

In order to find the solution, we need the vector from XB to Y to be perpendicular to both columns of X. This will give us the projection of Y onto the plane defined by linear combinations of the two columns of X.

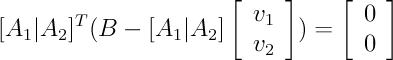

The vector from XB to Y is (Y-XB). If we transpose X and multiply by (Y-XB), the result will be a two component vector containing the dot products of the columns of X with the vector from XB to Y (the point in the plane to Y). Since we want the plane perpendicular to the vector from XB to Y, the dot products must be zero. This gives us the system we need to solve:

X'(Y-XB) = [0 0]'
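The perpendicularity condition can be checked on a case that is not a perfect fit. In the sketch below (Python with NumPy), the third intensity is changed to 70, a hypothetical value that is not from the post, and NumPy's general least squares routine `np.linalg.lstsq` stands in for the solution derived next. The dot products of the columns of X with the vector from XB to Y should come out as zero, up to floating-point rounding.

```python
import numpy as np

X = np.array([[1.0, 0.0],
              [0.0, 2.0],
              [2.0, 2.0]])
Y = np.array([10.0, 40.0, 70.0])  # hypothetical: no exact solution exists

# Least squares coefficients from NumPy's built-in solver.
B, *_ = np.linalg.lstsq(X, Y, rcond=None)

# Vector from the point in the plane (X @ B) to Y.
residual = Y - X @ B

# X'(Y - XB): dot product of each column of X with the residual.
dot_products = X.T @ residual

print(B)
print(dot_products)  # both entries should be zero up to rounding
```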

Now, as before, we can find the solution using matrix algebra.

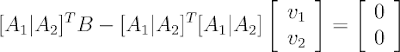

distributive property:

X'Y - X'XB = [0 0]'

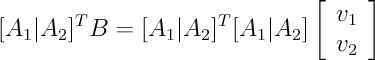

add X'XB to both sides:

X'Y = X'XB

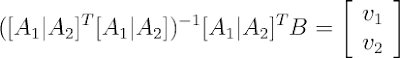

and, finally, multiply both sides by the inverse of X'X:

(X'X)^-1 X'Y = B

I feel like breathing a sigh of relief, because getting here was the point of this series of posts. The original question asked what's the deal with the expression (X'X)^-1 X'Y used in linear regression. Hopefully, it makes more sense now. :) It's all about finding the projection of Y on the column space of the X matrix.

(Exercise for the reader. Try this in Excel, Matlab or Scilab to verify that this gives the previously calculated values, 10 and 20.)
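For anyone without those tools handy, here is one possible way to carry out the check in Python with NumPy instead (a sketch; the variable names are assumptions). It evaluates (X'X)^-1 X'Y literally, and also solves X'XB = X'Y with `np.linalg.solve`, which avoids forming the inverse explicitly and is generally the numerically safer choice.

```python
import numpy as np

X = np.array([[1.0, 0.0],
              [0.0, 2.0],
              [2.0, 2.0]])
Y = np.array([10.0, 40.0, 60.0])

XtX = X.T @ X  # 2 x 2 matrix X'X
XtY = X.T @ Y  # 2-component vector X'Y

# Literal translation of (X'X)^-1 X'Y.
B = np.linalg.inv(XtX) @ XtY

# Same system X'X B = X'Y, solved without forming the inverse.
B_solve = np.linalg.solve(XtX, XtY)

# X @ B is the projection of Y onto the column space of X.
projection = X @ B

print(B)
print(B_solve)
print(projection)
```

Because the third sample was rigged, the projection lands exactly on Y here; with real measurements it generally would not.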

Comments:

In this example, I simply sampled three points in a 2D space to demonstrate the differences in dimensionality of the sample space (2D) and the solution space (3D) and how they're connected to the matrix. If I'd sampled four pixels, note that we would have been working with 4D vectors and projections in the solution space. If I'd sampled five pixels, the solution space would have involved 5D vectors, and so on: the dimensionality of the vectors in the solution space increases with each additional pixel sampled.
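The growth in dimensionality can be seen directly in the array shapes. In the sketch below (Python with NumPy), the helper `fit` and the extra pixels (3,1) and (4,4) are hypothetical, and the intensities are generated from the same rigged model, intensity = 10*x + 20*y, so every fit stays exact. The columns of X gain one component per added sample while B always has two.

```python
import numpy as np

def fit(points, intensities):
    """Build X and Y from samples and return X and (X'X)^-1 X'Y."""
    X = np.array(points, dtype=float)
    Y = np.array(intensities, dtype=float)
    B = np.linalg.inv(X.T @ X) @ X.T @ Y
    return X, B

# First three pixels are from the post; the last two are hypothetical.
points = [(1, 0), (0, 2), (2, 2), (3, 1), (4, 4)]
intensities = [10 * x + 20 * y for x, y in points]

results = []
for n in (3, 4, 5):
    X, B = fit(points[:n], intensities[:n])
    # Each column of X is a vector with n components; B keeps 2 components.
    results.append((n, X[:, 0].shape, B.shape, B))
    print(n, X[:, 0].shape, B.shape)
```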